Unable to Qualify Lead

23 Jul 2016 | Dynamics CRM | Dynamics CRM Online

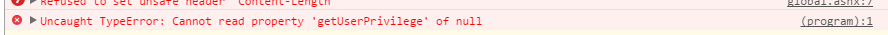

I was investigating an issue in a customized Dynamics CRM environment where the "Qualify" ribbon button on the Lead record had mysteriously stopped working.

Qualification from the grid and via the organization service API appeared to work as expected, so the problem seemed limited to the ribbon button. Given that the page was not even posting back to the server when the button was clicked, I suspected it was failing on the front end. I fired up the Chrome developer tools and, sure enough, was greeted with the following error on the console:

Checklist for Enabling Quick Create from Sub-grid

17 Apr 2016 | Dynamics CRM | Dynamics CRM Online

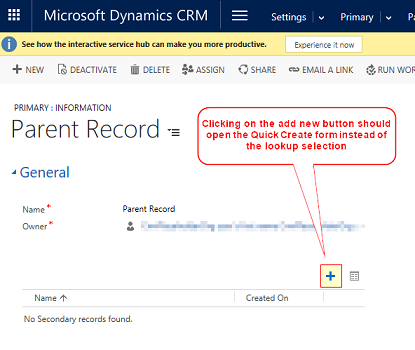

There have been a few instances where I've wanted to launch the quick create form for a record directly from a sub-grid's add button, without the extra step of bringing down the lookup view and then clicking the + New button.

While the required steps are covered across these two great posts (here and here), I have summarized them as a checklist for convenience.

Non-searchable Primary Key Field Breaks Pre-filtering

16 Apr 2016 | Dynamics CRM | Dynamics CRM Online

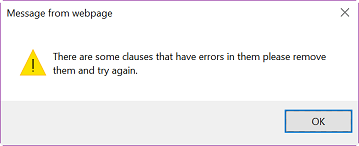

I recently implemented a custom FetchXML-based SSRS report that was designed to be run against a filtered list of accounts. The accounts were to be chosen by the user at the time of execution, hence the report was set up to use pre-filtering on the account entity.

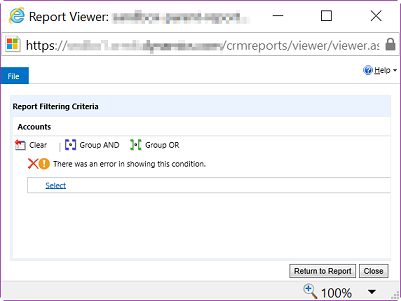
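For reference, a FetchXML-based report opts into pre-filtering through the `enableprefiltering` attribute on its `entity` element, which is what makes CRM show the Report Filtering Criteria dialog before the report runs. The Python sketch below builds such a query with the standard library; the `name` and `accountid` columns are illustrative assumptions, not the actual report's query.

```python
import xml.etree.ElementTree as ET


def prefiltered_fetch(entity: str, attributes: list) -> str:
    """Build a FetchXML query whose root entity is enabled for pre-filtering."""
    fetch = ET.Element("fetch", {"version": "1.0", "mapping": "logical"})
    # enableprefiltering="1" lets the user narrow down the records at run time.
    root = ET.SubElement(fetch, "entity", {"name": entity, "enableprefiltering": "1"})
    for name in attributes:
        ET.SubElement(root, "attribute", {"name": name})
    return ET.tostring(fetch, encoding="unicode")


# Illustrative columns only; a real report selects whatever it needs.
print(prefiltered_fetch("account", ["name", "accountid"]))
```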

The report worked fine except in one environment where the user was greeted with the following warning message:

Dismissing the warning opened up the Report Filtering Criteria dialog with the following error:

There was an error in showing this condition

And additional information:

The condition was referencing the field accountid. The field has been removed from the system or is not valid for advanced find.

Base64 and Maximum Attachment Size in Dynamics CRM

15 Apr 2016 | Dynamics CRM | Dynamics CRM Online

Microsoft Dynamics CRM provides out-of-the-box functionality to store and associate small file attachments with an entity record. This is achieved via the annotation entity, which is similar to any other entity except that it stores the metadata as well as the actual content of the file attachment.

As CRM is not designed to be a file store, it imposes some limitations on the size of attachments that can be uploaded. Recent versions of the product, including 2016, default this size to 5,120 KB, which can be raised to a hard maximum of 32,768 KB.

However, there is one caveat with this limit: CRM stores the byte stream in base64-encoded format, which represents every 3 bytes as 4 characters, so the saved content is roughly a third larger than the original file.
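To put numbers on this: base64 turns every 3 input bytes into 4 output characters (padding the final group), so an n-byte file encodes to 4 * ceil(n / 3) characters. The Python sketch below assumes the configured limit is compared against the encoded content and works backwards to the largest original file that fits under the default and maximum limits; treat it as an estimate under that assumption.

```python
import base64

KB = 1024


def encoded_size(raw_bytes: int) -> int:
    """Length of the padded base64 encoding of raw_bytes bytes."""
    return 4 * ((raw_bytes + 2) // 3)  # integer ceil(raw_bytes / 3)


def max_raw_size(limit_kb: int) -> int:
    """Largest original file (in bytes) whose base64 form fits within limit_kb."""
    limit_bytes = limit_kb * KB
    return (limit_bytes // 4) * 3


for limit_kb in (5120, 32768):
    raw = max_raw_size(limit_kb)
    print(f"{limit_kb} KB limit -> {raw // KB} KB original file ({raw} bytes)")

# Sanity check the formula against the standard library encoder.
sample = b"x" * 1000
assert len(base64.b64encode(sample)) == encoded_size(len(sample))
```

Under this assumption, the default 5,120 KB setting accommodates original files of about 3,840 KB, and the 32,768 KB maximum about 24,576 KB.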

Strategy for Maintaining CRM Solutions in Team Foundation Server using Microsoft.Xrm.Data.PowerShell

21 Jan 2016 | Dynamics CRM | Team Foundation Server | ALM

I've come across a few strategies on the internet for achieving this goal, each with its own pros and cons. This post describes my own attempt at setting up a development workflow that can be integrated with the team builds.

At its core, this implementation revolves around the Microsoft.Xrm.Data.PowerShell module and the Solution Packager tool provided with the official SDK. I have minimized the use of 3rd party libraries and have intentionally excluded the use of any Visual Studio extensions or helper tools.

Setting up the Tools

Installing Microsoft.CrmSdk.CoreTools

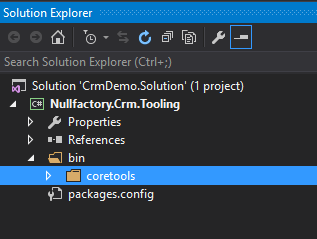

Let's start off by creating a "tooling" project that will act as a host for the tools and scripts involved in the process.

- Create a class library project to host the tools used for building the packages. I named mine `Nullfactory.Crm.Tooling`.
- Install the `Microsoft.CrmSdk.CoreTools` NuGet package by running the following command in the Package Manager Console: `Install-Package Microsoft.CrmSdk.CoreTools -Project Nullfactory.Crm.Tooling`. This NuGet package includes the `SolutionPackager.exe` tool.

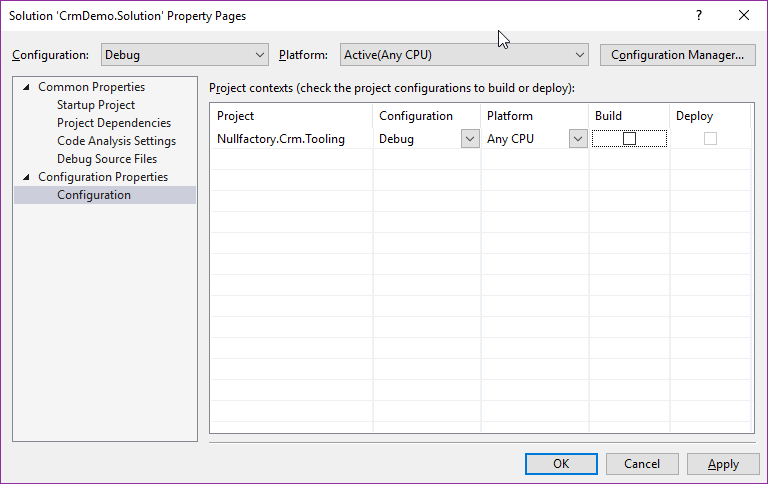

- Next, clean up the project by removing the default `Class1.cs` file as well as the `Debug` and `Release` folders within the `bin` folder.
- Update the solution configurations so that this project is not built. Do this by navigating to the solution property pages > Configuration Properties and un-ticking the check box in the Build column.
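Once installed, `SolutionPackager.exe` is driven from the command line with `/action`, `/zipfile`, `/folder` and `/packagetype` arguments. This post builds its workflow around PowerShell; purely as an illustration, the Python sketch below assembles that command line and can run it. The tool path, solution zip name and output folder are hypothetical placeholders.

```python
import subprocess

VALID_ACTIONS = ("Extract", "Pack")
VALID_PACKAGE_TYPES = ("Unmanaged", "Managed", "Both")


def solution_packager_args(tool, action, zipfile, folder, packagetype="Unmanaged"):
    """Assemble a SolutionPackager.exe command line as an argument list."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"action must be one of {VALID_ACTIONS}")
    if packagetype not in VALID_PACKAGE_TYPES:
        raise ValueError(f"packagetype must be one of {VALID_PACKAGE_TYPES}")
    return [
        tool,
        f"/action:{action}",  # Extract: zip -> folder, Pack: folder -> zip
        f"/zipfile:{zipfile}",
        f"/folder:{folder}",
        f"/packagetype:{packagetype}",
    ]


def run_solution_packager(args):
    """Run the tool, raising CalledProcessError on a non-zero exit code."""
    subprocess.run(args, check=True)


# Hypothetical paths: point the first argument at SolutionPackager.exe inside
# the restored Microsoft.CrmSdk.CoreTools package folder.
args = solution_packager_args(
    r"packages\Microsoft.CrmSdk.CoreTools\SolutionPackager.exe",
    "Extract", "MySolution.zip", r"src\MySolution")
print(" ".join(args))
```

Keeping the arguments in a list (rather than one string) avoids quoting problems when paths contain spaces.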

Disabling Recurring and OnDemand Web Jobs within a Deployment Slot

20 Dec 2015 | Azure

I've come to realize that things can get a bit tricky when working with deployment slots and WebJobs. I learned the hard way that stopping a slotted Web App does not stop the WebJobs it hosts. This means that I can't just stop all my website slots and expect the related jobs to shut down automatically as well. Bummer.

Okay, so I thought maybe I'd just disable the individual jobs for each of the slots? No luck on that front either, as the Azure portal only provides a stop option for continuous jobs and not for recurring and OnDemand jobs.

This limits us to a few possible solutions:

Strategy for Updating a SQL Server Database Schema via SSDT Delta Script

25 Nov 2015 | SQL Server | SQL Server Data Tools

This post describes the high-level steps I went through in order to get the SQL Server related components ready for automation in a project I worked on recently.

The project used a SQL Server Data Tools (SSDT) project to maintain the database schema in source control. Its output, a Data-tier Application Component Package (DACPAC), was deployed to the appropriate target environment via a WebDeploy package. And since the solution was designed around an Entity Framework (EF) database-first approach, Code First Migrations were not a viable upgrade strategy.
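For context, one common way to turn a DACPAC into a delta script is SqlPackage.exe's `Script` action, which compares the package against a target database and writes out the upgrade SQL without applying it. The Python sketch below only assembles that command line; the file names, server and database are hypothetical, and this is not necessarily the exact tooling the project used.

```python
def sqlpackage_script_args(sqlpackage, dacpac, server, database, output_sql):
    """Build a SqlPackage.exe command that writes a schema delta script."""
    return [
        sqlpackage,
        "/Action:Script",                  # generate the script, do not deploy it
        f"/SourceFile:{dacpac}",           # the DACPAC built by the SSDT project
        f"/TargetServerName:{server}",     # database to compare the DACPAC against
        f"/TargetDatabaseName:{database}",
        f"/OutputPath:{output_sql}",       # where the delta script is written
    ]


# Hypothetical names for illustration only.
args = sqlpackage_script_args(
    "SqlPackage.exe", r"bin\Release\MyDatabase.dacpac",
    "prod-sql01", "MyDatabase", "upgrade.sql")
print(" ".join(args))
```

Generating a script rather than publishing directly leaves room to review the SQL before it touches production.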

Here are the steps I followed in order to bring the production environment up-to-date:

Setting up a CDN to Stream Video via Azure Storage

11 Oct 2015 | Azure | CDN | Azure Storage Accounts

I needed to set up an Azure Content Delivery Network (CDN) in order to stream some video files stored in Azure Blob Storage. Sounds simple enough, right? Well, yes for the most part, but I did hit a few hurdles along the way. This post will hopefully help me avoid them next time.

Create the Storage Account

Something I found out after the fact was that CDN endpoints currently only support classic storage accounts. So the first order of business is to create a classic storage account, either via the old portal or using an Azure Resource Manager template.

Another thing I found out is that, at the time of writing, classic storage accounts cannot be created in the 'East US' location. The closest alternative was 'East US 2', which worked fine; I guess it's something worth considering if you want to co-locate all your resources.

Next, create a container within the storage account; the container will host the files that are served by the CDN. It can be created manually via the old portal or even through Visual Studio. Ensure that the container's access type is set to Public Blob.
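Once a CDN endpoint is pointed at the storage account, the container and blob path stay the same and only the host name changes. A small Python sketch of that mapping follows; both host names are hypothetical placeholders, so substitute your own storage account and CDN endpoint.

```python
from urllib.parse import urlsplit, urlunsplit


def to_cdn_url(blob_url: str, cdn_host: str) -> str:
    """Rewrite a blob URL to go through a CDN endpoint, keeping the container/blob path."""
    parts = urlsplit(blob_url)
    if not parts.netloc.endswith(".blob.core.windows.net"):
        raise ValueError(f"not an Azure Blob Storage URL: {blob_url}")
    # Keep scheme, path, query and fragment; swap only the host.
    return urlunsplit((parts.scheme, cdn_host, parts.path, parts.query, parts.fragment))


# Hypothetical storage account and CDN endpoint host names.
print(to_cdn_url("https://myvideos.blob.core.windows.net/media/intro.mp4",
                 "cdn.example.com"))
```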

Upgrade the Storage Account to a Newer Service Version

The first time I tried to stream a video, it did not work as expected; the stream was very choppy. It turns out that the default service version set on the storage account was not the latest. Read more here and here.

So the next step is to update the storage account to the latest service version in order to take advantage of the improvements. This can be done using the following code:

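```csharp
using System;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Auth;
using Microsoft.WindowsAzure.Storage.Blob;

// Replace with the classic storage account's name and access key.
var credentials = new StorageCredentials("accountname", "accountkey");
var account = new CloudStorageAccount(credentials, true); // true = use HTTPS
var client = account.CreateCloudBlobClient();

// Read the current Blob service properties, raise the default version and save them back.
var properties = client.GetServiceProperties();
properties.DefaultServiceVersion = "2013-08-15";
client.SetServiceProperties(properties);

Console.WriteLine(properties.DefaultServiceVersion);
```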

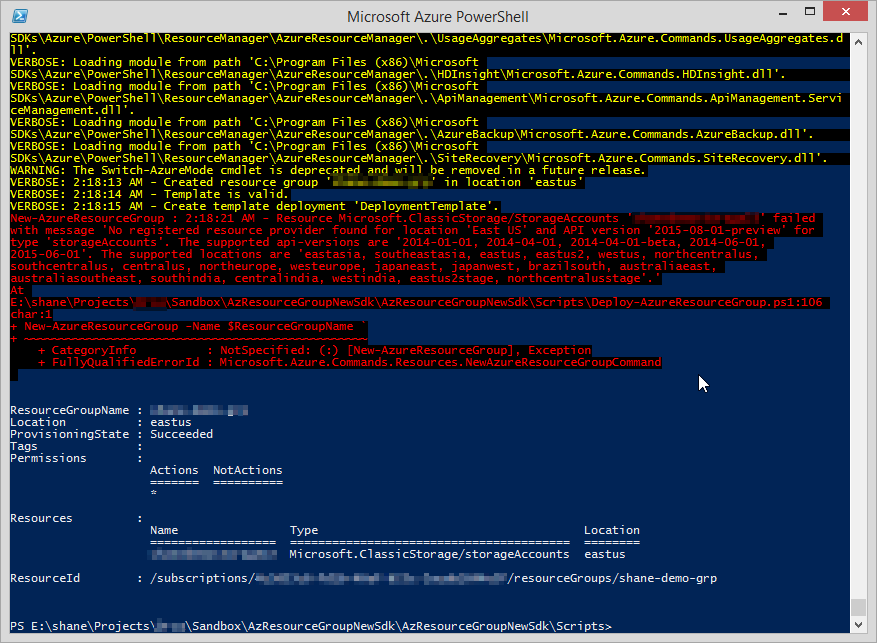

Deploying an Azure Classic Storage Account using Azure Resource Manager

10 Oct 2015 | Azure | Deployment | Azure Storage Accounts

I've been working on Azure Resource Group templates quite a bit over the last few weeks. While it has been a pleasant experience overall, I ran into some hurdles the other day while trying to figure out how to create a Microsoft.ClassicStorage/StorageAccounts resource using the Azure Resource Manager (ARM) API.

The latest version of the Azure SDK tools for Visual Studio (2.7 at the time of writing) was not particularly helpful in generating the required JSON, but thankfully this post pointed me in the right direction. After a little bit of fiddling, I found that 2015-06-01 appears to be the last supported apiVersion that works with classic storage.

Here's the final template I used to create a classic storage account:

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "PrimaryStorageName": {
      "type": "string"
    },
    "PrimaryStorageType": {
      "type": "string",
      "defaultValue": "Standard_LRS",
      "allowedValues": [
        "Standard_LRS",
        "Standard_GRS",
        "Standard_ZRS"
      ]
    },
    "PrimaryStorageLocation": {
      "type": "string",
      "defaultValue": "East US",
      "allowedValues": [
        "East US",
        "West US",
        "West Europe",
        "East Asia",
        "South East Asia"
      ]
    }
  },
  "variables": {
  },
  "resources": [
    {
      "name": "[parameters('PrimaryStorageName')]",
      "type": "Microsoft.ClassicStorage/StorageAccounts",
      "location": "[parameters('PrimaryStorageLocation')]",
      "apiVersion": "2015-06-01",
      "dependsOn": [ ],
      "properties": {
        "accountType": "[parameters('PrimaryStorageType')]"
      }
    }
  ],
  "outputs": {
  }
}
```
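The template leaves `PrimaryStorageName` without a default, so every deployment needs a parameter value for it. As a hedged sketch, the Python helper below produces a matching deployment parameters document; the account name is hypothetical, and the 3-24 lowercase letters and digits check reflects Azure's storage account naming rule rather than anything enforced by the template itself.

```python
import json
import re

SCHEMA = "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#"
STORAGE_TYPES = {"Standard_LRS", "Standard_GRS", "Standard_ZRS"}
LOCATIONS = {"East US", "West US", "West Europe", "East Asia", "South East Asia"}


def build_parameters(name, storage_type="Standard_LRS", location="East US"):
    """Return a parameters document matching the classic storage template."""
    # Storage account names: 3-24 characters, lowercase letters and digits only.
    if not re.fullmatch(r"[a-z0-9]{3,24}", name):
        raise ValueError(f"invalid storage account name: {name!r}")
    # Mirror the template's allowedValues so mistakes surface before deployment.
    if storage_type not in STORAGE_TYPES:
        raise ValueError(f"unsupported storage type: {storage_type}")
    if location not in LOCATIONS:
        raise ValueError(f"unsupported location: {location}")
    return {
        "$schema": SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {
            "PrimaryStorageName": {"value": name},
            "PrimaryStorageType": {"value": storage_type},
            "PrimaryStorageLocation": {"value": location},
        },
    }


# Hypothetical account name.
params = build_parameters("myclassicstorage01", location="West US")
print(json.dumps(params, indent=2))
```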

Publishing Assemblies into the GAC without GacUtil

31 Aug 2015 | Deployment

I constantly find myself googling this code snippet, so it's nice to keep it handy:

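```powershell
# Load System.EnterpriseServices, which exposes the Publish helper class.
[System.Reflection.Assembly]::Load("System.EnterpriseServices, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b03f5f7f11d50a3a")

$publish = New-Object System.EnterpriseServices.Internal.Publish

# Install the strong-named assembly into the GAC (run from an elevated session).
$publish.GacInstall("c:\temp\publish_dll.dll")
```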